There has always been fear about new technologies, argue GSK’s leading AI experts Kim Branson, Robert Vandersluis and Jeremy England, but that shouldn’t hold us back from realising the potential of Artificial Intelligence (AI). Here, they explain how we can reap the rewards of this powerful technology in a responsible and ethical way – and accelerate the delivery of new medicines and vaccines to patients.

Fear of AI is nothing new.

In fact, it can be traced back to the earliest stages of AI development during the late 20th century, when the promise of intelligent machines simultaneously fascinated and alarmed futurists.

But that didn’t stop it from progressing, and the 21st century has seen the emergence of new AI technologies that demonstrate unprecedented capabilities. At the forefront of this lies the sudden and significant spike in the capabilities of large language models – most famously showcased through the abilities of ChatGPT.

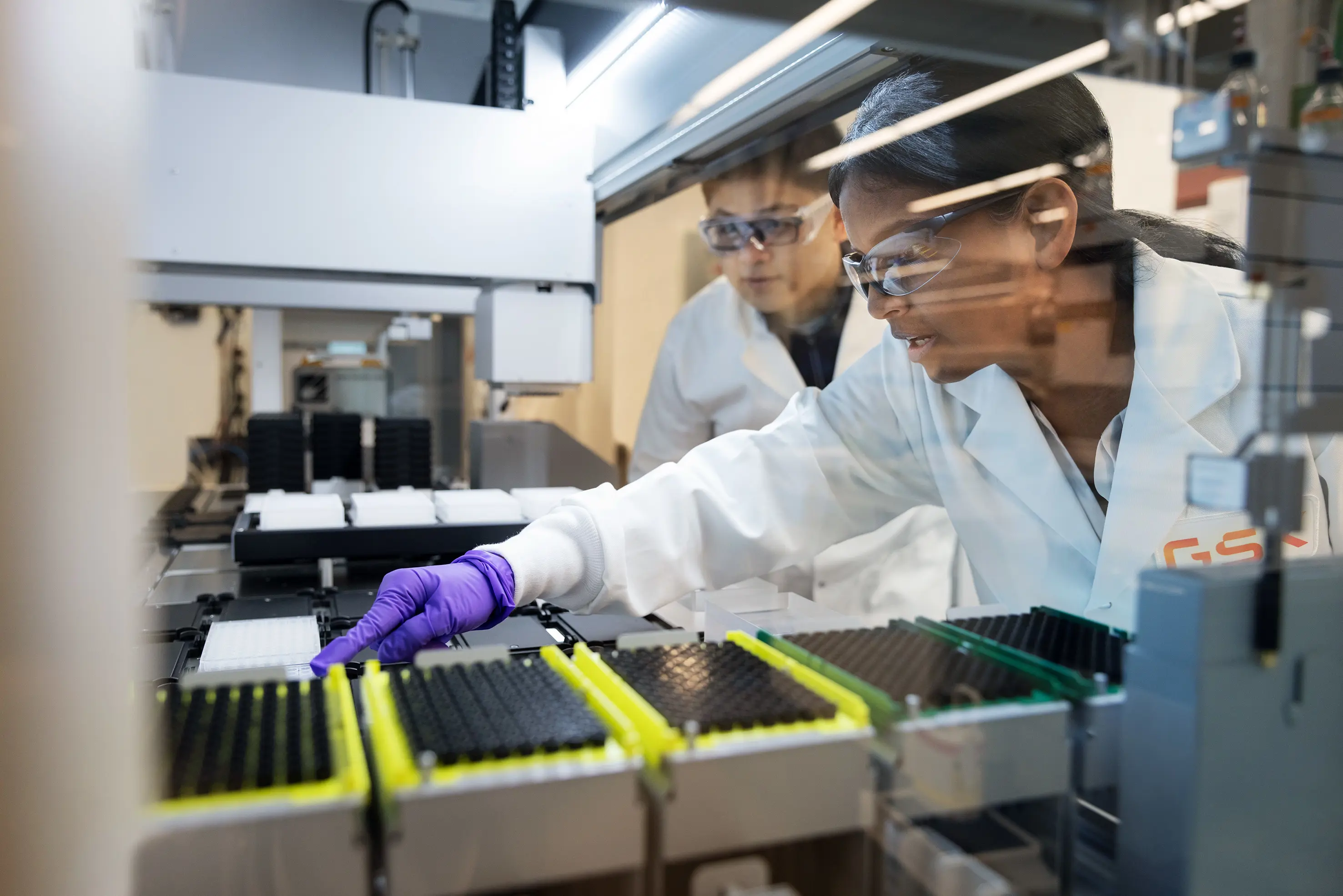

Trained using huge amounts of data, such models have introduced a step change in the reasoning and productivity power afforded by AI. At GSK, AI means we can build a more complete picture of human health and disease. It allows us to generate and process vast amounts of biological and clinical data so that we can uncover complex patterns and trends that help guide drug discovery and development – from early stage research through to clinical trials.

Yet the rapid evolution of these technologies has also raised questions about accountability – and fed into broader concerns around job displacement and the ability of AI to match or even surpass human intelligence. In reality, the technology falls short of AI that has intellect, self-awareness and consciousness at a level equivalent to humans – currently and for the foreseeable future.

Even so, these fears have led to demands to halt AI development altogether – some of them made without critically examining the negative consequences for society that such a halt could provoke. Concerns have also influenced regulations such as the EU AI Act, which will impose tight restrictions on AI development across the entire EU economy – a move that has been met with some criticism.

It is our belief that calls to halt or significantly delay progress across all areas – including AI use in healthcare – may risk doing more harm than good.

Realising the benefits

Placing undue limitations on AI development could distract from the real challenges that arise from the improper creation and use of AI. For example, AI has been used to spread misinformation, and some of it has been trained with human biases and discriminatory values that could affect the data it produces.

It could also deny potential societal benefits to humanity. Rather than replace people in the workplace, for example, AI could greatly improve productivity and even create better paid, more specialised jobs if utilised effectively.

In healthcare, AI is transforming diverse functions, improving administrative workflows, surgical safety, health monitoring, precision medicine and preventive care. According to Harvard’s School of Public Health, using AI to make diagnoses may reduce treatment costs by up to 50% and improve health outcomes by 40%.

The AI-powered systems that we are currently developing at GSK enable large-scale molecular data to be managed and analysed so that we can better identify causal disease associations and new therapeutic strategies. This accelerates the discovery of potential drugs and ultimately gets vaccines and medicines to the patients who need them faster than we’ve ever been able to before.

Such remarkable capabilities already have the power to save countless lives. We can only begin to imagine what we can achieve for patients in the future if such progress is allowed to continue unrestrained by knee-jerk restrictions.

A fine balance

So, the question arises: how do we balance the risks of developing AI with the incredible benefits?

We believe the key lies in putting human accountability at the centre of the technology and acknowledging that an AI algorithm or system cannot replace human judgment.

Tools need to be designed, deployed, and used with good intention. The best way to achieve this is to bake safe and ethical values and practices into every stage of development. Top-down governance and independent regulation could provide human oversight and hold individual users and developers to account.

Human interpretation of AI-generated predictions and validation of the results are also essential to ensure that ethical and safety standards are upheld. In healthcare, there is already a huge safety net in place for drug development. New medicines and vaccines undergo rigorous testing to ensure that they are safe and effective for patients. Now, we need that same rigour to be applied to the evidence generated by AI in healthcare so it can be managed responsibly.

Appropriate governmental regulation is also needed to weigh up potential risks, protect public safety and manage the rate at which AI technologies are integrated into society.

Rather than blanket regulations such as those in the EU AI Act, we advocate for a more sophisticated approach where sector-specific regulators – such as existing health regulators – are empowered to establish regulations that balance the unique risks and opportunities in each domain.

Rather than succumbing to thinking in simplistic extremes, we must rise to the challenge of safely and effectively harnessing technological progress to best serve the interests of individuals and society.